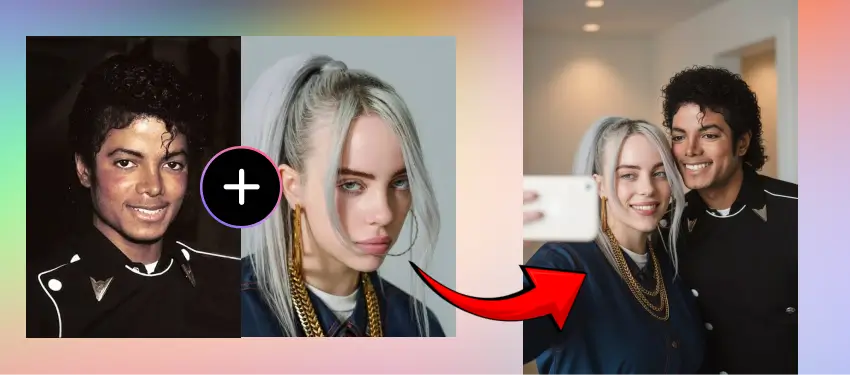

In the fast-paced world of generative AI, new tools appear almost every month, but only a few truly change how creators work. One of the most talked-about models today is Nano-Banana, an advanced AI image editing system believed to be developed by Google researchers. First spotted in LMArena’s Image Edit Arena, Nano-Banana has quickly become the subject of speculation, excitement, and high expectations.

What Is Google Nano-Banana?

Google Nano-Banana is an advanced AI image editor that lets you edit pictures using natural language instructions. Rather than working with layers, brushes, or masks, you describe the change you want, and the model applies it to the scene while preserving coherence.

For example:

- “Replace the background with a sunset beach.”

- “Turn the character’s outfit into medieval knight armor.”

- “Add softer lighting on the left side.”

Nano-Banana doesn’t just generate random results; it maintains perspective, lighting, and object consistency in a way most other models struggle with.

The Story Behind Google Nano-Banana

Unlike many Google products that arrive with press releases, Nano-Banana quietly appeared in LMArena, a competitive platform where AI models are tested. Early users noticed its precision in handling complex, multi-step edits and began sharing results online.

While Google hasn’t officially confirmed its release, experts believe Nano-Banana could be an experimental sibling of Imagen or Gemini Vision. Its codename “Nano-Banana” is likely temporary and used for internal testing.

Key Features & Strengths of Nano-Banana

- Exceptional Prompt Understanding: Handles complex instructions, such as replacing multiple characters in one scene while keeping lighting and context intact.

- One-Shot Editing Consistency: Modifies specific areas of an image while preserving background details and scene coherence.

- Versatile Styles: From photorealistic renders to stylized character art, Nano-Banana can adapt to different visual aesthetics.

- Identity Preservation: Objects and characters maintain consistent identity across multiple edits, which is ideal for branding and storytelling.

- Built-In Safety & Provenance: Google has embedded invisible provenance signals to track AI-generated edits, ensuring authenticity and reducing misuse.

How Does Google Nano-Banana Work?

Nano-Banana's main strength appears to be its multimodal vision-language architecture. Here is a breakdown of how it works:

Visual-Language Encoders: A visual-language encoder maps the relevant parts of the text instruction to the corresponding regions of the image. For example, when you tell the model to “soften the lighting on the left side,” it can pick out that specific area.

Layered Editing Process: Instead of changing the whole image in one go, Nano-Banana fine-tunes edits step by step. This layered method makes the final result look more polished and lifelike.

Outpainting: With this feature, you can extend or reshape parts of an image while keeping the lighting, perspective, and symmetry consistent, so the final edit blends in seamlessly.

Invisible Tracking Signals: Every edit embeds subtle provenance markers that identify the content as AI-edited, which is a step toward ethical AI art.

Safety Filters: Google has established policies to filter out unsafe, harmful, or biased generations, making the model safe by design.

This sets Nano-Banana apart from models such as Stable Diffusion or Midjourney, where manual masking or external refinement tools are commonly needed.
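Google has not published how Nano-Banana works internally, so the following Python sketch is only a toy illustration of two ideas described above: resolving an instruction to a region of the image, and applying edits step by step rather than all at once. Everything in it is an assumption made for illustration: the `EditStep` type, the `left_half` region test, and the `soften` operation are hypothetical names, and the "image" is just a small grid of brightness values.

```python
from dataclasses import dataclass
from typing import Callable, List

# Toy image: rows of grayscale brightness values in [0, 1].
Image = List[List[float]]


@dataclass
class EditStep:
    """One instruction, already resolved to a region and an operation.

    Hypothetical structure for illustration; not Nano-Banana's real design.
    """
    description: str
    in_region: Callable[[int, int, int, int], bool]  # (row, col, height, width)
    operation: Callable[[float], float]


def left_half(row: int, col: int, height: int, width: int) -> bool:
    """Stand-in for grounding the phrase 'on the left side' to pixels."""
    return col < width // 2


def soften(value: float, strength: float = 0.5, target: float = 0.5) -> float:
    """Pull a brightness value part of the way toward mid-gray."""
    return value + strength * (target - value)


def apply_step(image: Image, step: EditStep) -> Image:
    """Return a new image with the operation applied only inside the region."""
    height, width = len(image), len(image[0])
    return [
        [
            step.operation(value) if step.in_region(r, c, height, width) else value
            for c, value in enumerate(row)
        ]
        for r, row in enumerate(image)
    ]


def apply_edits(image: Image, steps: List[EditStep]) -> Image:
    """Apply each step in order, feeding each result into the next step."""
    for step in steps:
        image = apply_step(image, step)
    return image


image = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
]
steps = [
    EditStep("soften the lighting on the left side", left_half, soften),
    EditStep("refine the same region once more", left_half, soften),
]
edited = apply_edits(image, steps)
print(edited)
```

In this sketch the right half of the grid is left untouched while the left half moves toward mid-gray over two passes, which mirrors the idea of a localized, incremental edit. A real system would replace the hand-written region test with a learned model and the brightness tweak with image generation.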

Limitations of Google Nano-Banana

Like every AI tool, Nano-Banana isn’t flawless. Early tests suggest:

- Visual bugs with reflections and object placement

- Text rendering problems (letters do not line up properly, as in Stable Diffusion and Midjourney)

- Anatomical errors, especially with complex hand positions or faces

Still, these limitations are relatively minor compared to its groundbreaking strengths.

Where Can You Use Google Nano-Banana?

Currently, Nano-Banana is in limited preview and not publicly available. Access appears restricted to AI testing platforms such as:

- LMArena’s Image Edit Arena: Where the model was first spotted.

- Sandbox Research Communities: Selected testers and researchers have shared edits on forums like r/LocalLLaMA.

- Experimental Google Ecosystem: Rumors suggest it could eventually join the Gemini AI suite or integrate with Flux AI, which already hosts several advanced editing tools.

At present, there is no public API, official download, or open-access platform for Nano-Banana. If Google moves forward with a release, we expect it to appear through its official AI research channels or as a feature inside Google Photos, Google Design tools, or even Android apps.

Why Nano-Banana Matters for Creators

Nano-Banana could fundamentally change the creative workflow:

| Aspect | Impact on Creators |
| --- | --- |
| No technical barriers | Enables anyone to produce professional-level edits without needing Photoshop expertise. |
| Faster creativity | Designers can instantly experiment and refine their ideas with ease. |
| Brand consistency | Maintains uniform characters, colors, and styles across campaigns and projects. |
| Automation potential | Likely integration with Google’s ecosystem for streamlined creative workflows. |

If and when Google officially releases Nano-Banana, it could reshape not just AI art but the entire creative workflow for digital media. Until then, the buzz around this “banana” is only going to grow.